Sat 22 Apr 2023

St. Petersburg, Russia

The Third International Conference on Code Quality (ICCQ) was a one-day computer science event focused on static and dynamic analysis, program verification, programming language design, software bug detection, and software maintenance. ICCQ was organized in cooperation with the IEEE Computer Society and St. Petersburg University.

Watch all presentations on YouTube and subscribe to our channel so that you don’t miss the next event!

The Proceedings of ICCQ were published by IEEE Xplore.

Keynote

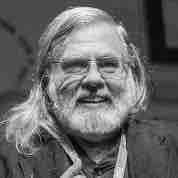

David West

New Mexico Highlands University

Dr. West is the author of the well-known book Object Thinking, dedicated to the quality of object-oriented programming. He began his computing career as a programmer in 1968, the same year that software engineering became a profession. Since then, he has delivered over a hundred papers and presentations at conferences, including OOPSLA, and in journals, including CACM. He has authored four books. Today he provides mentoring, training, coaching, and consulting services.

Program Committee

Andrey Terekhov (Chair)

SPbU

And in alphabetical order:

Alexandre Bergel

University of Chile

Laura M. Castro

Universidade da Coruña

Daniele Cono D’Elia

Sapienza University of Rome

Pierre Donat-Bouillud

Czech Technical University

Bernhard Egger

Seoul National University

Samir Genaim

Universidad Complutense de Madrid

Yusuke Izawa

Tokyo Institute of Technology

Ranjit Jhala

University of California, San Diego

ACM Fellow

Christoph Kirsch

University of Salzburg

Yu David Liu

Binghamton University

Wolfgang de Meuter

Vrije Universiteit Brussel

Antoine Miné

Sorbonne Université

Xuehai Qian

University of Southern California

Junqiao Qiu

Michigan Technological University

Yudai Tanabe

Tokyo Institute of Technology

Tachio Terauchi

Waseda University

David West

New Mexico Highlands University

Vadim Zaytsev

University of Twente

Program / Agenda

17:00 (Moscow time)

Yegor Bugayenko:

Opening

17:10

Andrey Terekhov:

PC Welcome Speech

17:20

David West:

What IS Code Quality: from “ilities” to QWAN

18:05

Rowland Pitts:

Mutant Selection Strategies in Mutation Testing

18:35

Mohamed Wiem Mkaouer:

Understanding Software Performance Challenges - An Empirical Study on Stack Overflow

19:05

Al Khan:

Applying Machine Learning Analysis for Software Quality Test

19:35

Sergey Kovalchuk:

Test-based and metric-based evaluation of code generation models for practical question answering

20:05

Closing

All videos are in this playlist.

Keynotes and Invited Talks

What IS Code Quality: from “ilities” to QWAN

This talk will be a little bit of history, some philosophy, and some speculation, but will mostly pose questions. We will start with some historical highlights, like the “ilities,” or desiderata for quality code, before moving to philosophical questions about a spectrum of code quality and the criteria that might be used to place some given code on that spectrum. We will end with speculation about whether code can, or should, exhibit the Quality Without A Name (QWAN) of Christopher Alexander. Along the way we will share some hopefully amusing and illustrative stories.

Accepted Papers

We received 13 submissions, of which 4 papers were accepted. Each paper received at least four reviews from PC members.

Mutant Selection Strategies in Mutation Testing

Mutation Testing offers a powerful approach to assessing unit test set quality; however, software developers are often reluctant to embrace the technique because of the tremendous number of mutants it generates, including redundant and equivalent mutants. Researchers have sought strategies to reduce the number of mutants without reducing effectiveness, and also ways to select more effective mutants, but no strategy has performed better than random mutant selection. Equivalent mutants, which cannot be killed, make achieving mutation adequacy difficult, so most research is conducted with the assumption that unkilled mutants are equivalent. Using 15 java.lang classes that are known to have truly mutation adequate test sets, this research demonstrates that even when the number of equivalent mutants is drastically reduced, they remain a tester’s largest problem, and that apart from their presence achieving mutation adequacy is relatively easy. It also assesses a variety of mutant selection strategies and demonstrates that even with mutation adequate test sets, none perform as well as random mutant selection.
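
For readers new to the terms in this abstract, here is a minimal Python sketch of a mutation score, an equivalent mutant, and random mutant selection. It is not taken from the paper: the function under test, the hand-written mutants, the test set, and the helper names (`is_killed`, `mutation_score`, `random_selection`) are all hypothetical and chosen only for illustration.

```python
import random

# Hypothetical function under test: returns the larger of two numbers.
def original(a, b):
    return a if a > b else b

# Hand-written mutants of `original` (illustrative only; real tools
# generate these automatically by applying mutation operators).
MUTANTS = {
    "gt_to_lt": lambda a, b: a if a < b else b,
    # Equivalent mutant: when a == b both branches return the same value,
    # so no test can distinguish it from the original.
    "gt_to_ge": lambda a, b: a if a >= b else b,
    "return_a": lambda a, b: a,
    "return_b": lambda a, b: b,
}

# Hypothetical test set: (arguments, expected result).
TESTS = [((1, 2), 2), ((5, 3), 5), ((4, 4), 4)]

def is_killed(mutant):
    """A mutant is killed if at least one test observes a wrong result."""
    return any(mutant(*args) != expected for args, expected in TESTS)

def mutation_score(names):
    """Fraction of the given mutants that the test set kills."""
    killed = sum(is_killed(MUTANTS[name]) for name in names)
    return killed / len(names)

def random_selection(names, fraction, seed):
    """Pick a random subset of mutants; the seed makes runs repeatable."""
    rng = random.Random(seed)
    k = max(1, round(len(names) * fraction))
    return rng.sample(sorted(names), k)

all_names = list(MUTANTS)
print("score on all mutants:", mutation_score(all_names))
sample = random_selection(all_names, fraction=0.5, seed=0)
print("score on random sample", sample, ":", mutation_score(sample))
```

In this toy setup the test set kills every mutant except `gt_to_ge`, so the score cannot reach 1.0 however many tests are added. That is the difficulty with equivalent mutants that the abstract describes.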

Understanding Software Performance Challenges - An Empirical Study on Stack Overflow

Performance is a quality aspect describing how the software is performing. Any performance degradation will further affect other quality aspects, such as usability. Software developers continuously conduct testing to ensure that code additions or changes do not damage existing functionalities or negatively affect the quality. Hence, developers set strategies to detect, locate and fix the regression if needed. This paper provides an exploratory study on the challenges developers face in resolving performance regression. The study is based on the questions on a technical forum about performance regression. We collected 1828 questions discussing the regression of software execution time. All of these questions were manually analyzed. The study resulted in a categorization of the challenges. We also discussed the difficulty level of performance regression issues within the developers’ community. This study provides insights to help developers during the software design and implementation to avoid regression causes.

Applying Machine Learning Analysis for Software Quality Test

One of the biggest expenses in software development is maintenance. Therefore, it’s critical to comprehend what triggers maintenance and whether it can be predicted. Numerous research outputs have demonstrated that specific methods of assessing the complexity of created programs may produce useful prediction models to ascertain the possibility of maintenance due to software failures. As a routine it is performed prior to the release, and setting up the models frequently calls for certain object-oriented software measurements. It’s not always the case that software developers have access to these measurements. In this paper, machine learning is applied to the available data to calculate the cumulative software failure levels. A technique to forecast a software’s residual defectiveness using machine learning can be looked into as a solution to the challenge of predicting residual flaws. Software metrics and defect data were separated out of the static source code repository. Static code is used to create software metrics, and reported bugs in the repository are used to gather defect information. By using a correlation method, metrics that had no connection to the defect data were removed. This makes it possible to analyze all the data without pausing the programming process. The primary issue with large, sophisticated software is that it is impossible to control everything manually, and the cost of an error can be quite high. Developers may miss errors during testing as a consequence, which will raise maintenance costs. Finding a method to accurately forecast software defects is the overall objective.
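
The correlation-based filtering step mentioned in this abstract could look roughly like the Python sketch below. The abstract does not say which correlation measure or cutoff the authors used, so the Pearson coefficient, the `0.3` threshold, the metric names, and all the numbers here are assumptions made purely for illustration.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 for a constant series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)

def select_metrics(metrics, defects, threshold=0.3):
    """Keep metrics whose absolute correlation with defects meets the threshold.

    The threshold is an assumed value, not one reported in the paper.
    """
    return [
        name
        for name, values in metrics.items()
        if abs(pearson(values, defects)) >= threshold
    ]

# Hypothetical per-module data: one value per module for each metric.
defects = [0, 1, 3, 4, 7]
metrics = {
    "cyclomatic_complexity": [2, 4, 9, 11, 20],
    "loc": [120, 200, 450, 500, 900],
    "comment_ratio": [0.2, 0.2, 0.2, 0.2, 0.2],  # constant, carries no signal
    "file_name_length": [8, 12, 8, 12, 10],      # weakly related noise
}

print(select_metrics(metrics, defects))
```

With this made-up data, only `cyclomatic_complexity` and `loc` survive the filter. The surviving metrics would then be the inputs to whatever prediction model is trained on the defect data.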

Test-based and metric-based evaluation of code generation models for practical question answering

We performed a comparative analysis of code generation model performance, comparing evaluation with common NLP metrics to test-based evaluation. The investigation was performed in the context of question answering with code (test-to-code problem) and aimed to check the applicability of both approaches to evaluating generated code in a fully automatic manner. We used CodeGen and GPTNeo pretrained models applied to a problem of question answering using a Stack Overflow-based corpus (APIzation). For test-based evaluation, industrial test-generation solutions (Machinet, UTBot) were used to provide automatically generated tests. The analysis showed that performance evaluation based solely on NLP metrics or solely on tests provides a rather limited assessment of generated code quality. We see evidence that predictions with both high and low NLP metrics exist that pass and don’t pass tests. With the early results of our empirical study being discussed in this paper, we believe that the combination of both approaches may increase possible ways for building, evaluating, and training code generation models.

Organizers

These people made ICCQ 2023 happen:

Yegor Bugayenko (Chair)

Alexandra Baylakova

Irina Grashkina

Aleksandra Oligerova